Context Vector Optimization: From H1 Header to the Last Word.

Imagine that every word on the internet has its own unique postal code that not only determines its address but also its entire neighborhood – relationships with other words, their meaning, and the sense they create together. This is the essence of context vectors, a revolutionary concept that is changing the face of search engine optimization (SEO). In an era where algorithms like Google BERT and MUM strive to understand language at a human level, old techniques based on rigid keyword matching are becoming not only outdated but actually ineffective. The key to success is no longer just using the right phrase, but building around it a rich, logical, and semantically coherent context that resonates with user intent.

This article will guide you through advanced context vector optimization strategies. We’ll start with solid theoretical foundations, explaining what vectors are and how language models learn to understand the world. Then we’ll move to practice – showing how strategic use of heading structure, from H1 to H6, creates a skeleton for context that guides both algorithms and users. We’ll delve into advanced techniques such as semantic gap mapping and entity salience enhancement, and finally look at tools and the future that inextricably links SEO with artificial intelligence. It’s time to abandon keyword thinking and start thinking about meaning.

Fundamentals – What Are Context Vectors and How Do They Work?

Before we dive into the technical aspects of optimization, we must understand the fundamental change that has occurred in the world of search. We’ve come a long way from simple keyword counting to an era where machines learn to interpret intentions and nuances of human language. It is precisely this evolution that has led us to the concept of context vectors.

From Keywords to Meaning: The Evolution of Search.

The beginnings of SEO were relatively simple. Early search algorithms focused mainly on two factors: keyword density on the page and, with PageRank, the number of incoming links. This led to unnatural texts stuffed with key phrases that were difficult for humans to read but easy for machines to match. However, this state of affairs could not last forever. Users expected more accurate answers, and Google had to meet these expectations.

The breakthrough was the introduction of semantic search. Instead of focusing on exact word matching, algorithms began analyzing the meaning behind the query. Updates such as Hummingbird, RankBrain, and finally BERT (Bidirectional Encoder Representations from Transformers) and MUM (Multitask Unified Model) revolutionized how Google processes natural language. These algorithms can now understand synonyms, polysemy (word ambiguity), and most importantly, the context in which a given word is used.

This means that the query “best restaurants in Warsaw near downtown” is understood as a need to find dining establishments with high ratings in a central location, not as a collection of separate words.

Vectors, Embeddings, and Multidimensional Space.

How can a machine “understand” meaning? The answer lies in embeddings – a way of representing words, phrases, and even entire documents as numerical vectors in multidimensional space. Each word becomes a point in this space, and its position is defined by relationships with other words. Words with similar meanings, like “car” and “automobile,” are located close to each other, while those with different meanings, like “car” and “banana,” are far apart. This geometric representation of meaning is the foundation of modern NLP.

Natural language processing (NLP) models such as Word2Vec, GloVe, or BERT learn these representations by analyzing gigantic text corpora (e.g., all of Wikipedia and billions of other web pages). Through this, they discover complex semantic dependencies that go beyond simple synonyms. The most famous example illustrating the power of embeddings is vector arithmetic:

Fun Fact: In vector space, you can often perform mathematical operations that reflect semantic relationships. The classic example is: vector(“King”) – vector(“Man”) + vector(“Woman”), which results in a vector very close to vector(“Queen”). This shows that the model has learned the concept of gender and social status as dimensions in its meaning space. Similarly, vector(“Paris”) – vector(“France”) + vector(“Poland”) gives a result close to vector(“Warsaw”).
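The arithmetic above can be tried on a tiny scale. The sketch below uses hand-picked, three-dimensional toy vectors (the `EMBEDDINGS` values and the `analogy` helper are assumptions made for illustration, not output from a trained model), so it only shows the mechanics: subtract, add, then look for the nearest remaining word by cosine similarity.

```python
import math

# Hypothetical toy embeddings with dimensions (royalty, maleness, femaleness).
# Real models learn hundreds of dimensions from text; these values are hand-picked.
EMBEDDINGS = {
    "king":   [0.9, 0.9, 0.1],
    "queen":  [0.9, 0.1, 0.9],
    "man":    [0.1, 0.9, 0.1],
    "woman":  [0.1, 0.1, 0.9],
    "banana": [0.0, 0.2, 0.2],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def analogy(a, b, c):
    """Return the word closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    # Exclude the input words so the answer is a genuinely different word.
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, EMBEDDINGS[w]))

result = analogy("king", "man", "woman")
print(result)
```

With trained embeddings the match is rarely exact, which is why the result is described as "very close to" the expected word rather than equal to it.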

The Role of Context: How Vectors Understand Surroundings.

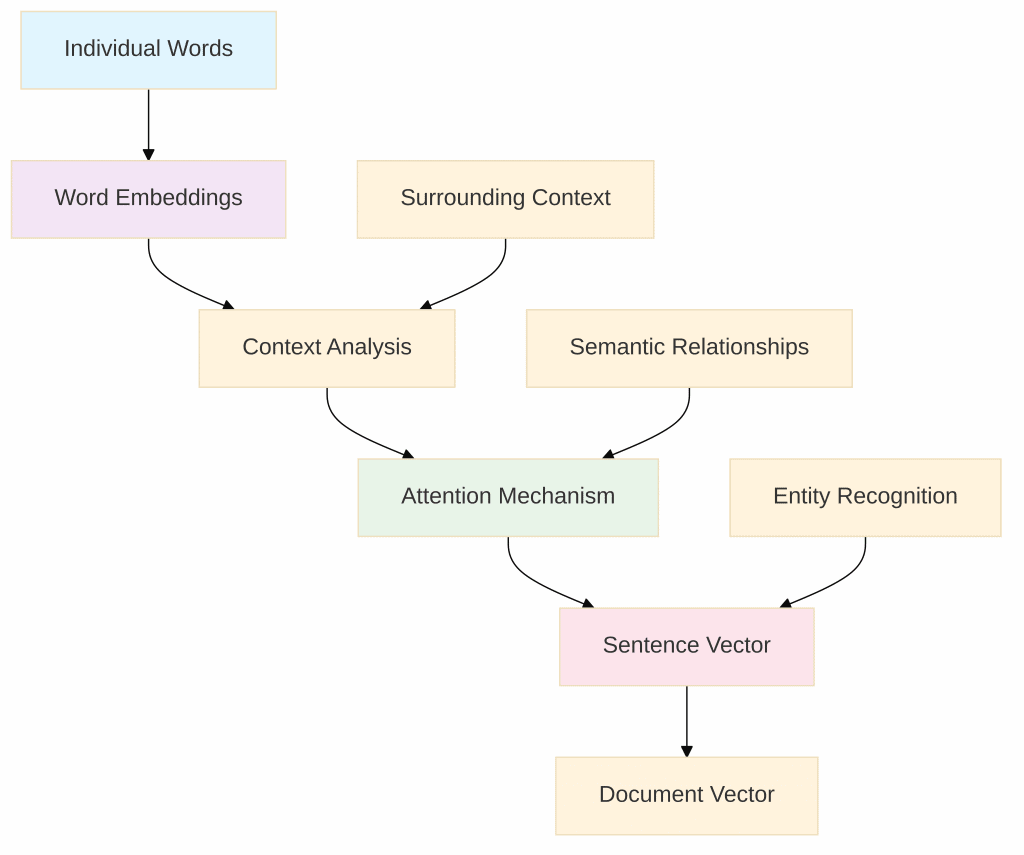

However, a single word is not enough. The real magic begins when we analyze context. The vector of the word “bank” will be completely different in the sentence “I went to the bank to deposit money” than in “We sat by the river bank.” Modern models like BERT are “bidirectional,” meaning they analyze words both before and after a given term to precisely determine its meaning in specific usage.

This mechanism, called the “attention mechanism,” allows the model to decide which other words in the sentence are most important for interpreting a given word. The vector of an entire sentence or paragraph becomes the resultant of component vectors and their mutual, complex relationships, which allows the model to capture the overall sense of the statement.
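To make the idea of attention more tangible, here is a minimal sketch of scaled dot-product attention for a single word. It is heavily simplified: the two-dimensional `VECTORS` are invented for this example, and the learned projection matrices that real models such as BERT apply are left out entirely. It only illustrates how the same word ends up with a different vector depending on its neighbors.

```python
import math

# Hypothetical 2-d toy vectors: dimension 0 ~ "finance", dimension 1 ~ "nature".
VECTORS = {
    "bank":    [0.5, 0.5],   # ambiguous on its own
    "deposit": [1.0, 0.0],
    "money":   [0.9, 0.1],
    "river":   [0.0, 1.0],
    "sat":     [0.1, 0.3],
}

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def contextual_vector(word, sentence):
    """Scaled dot-product attention for one word, without learned projections."""
    query = VECTORS[word]
    keys = [VECTORS[w] for w in sentence]
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The contextual vector is the attention-weighted average of the sentence vectors.
    return [sum(w * key[i] for w, key in zip(weights, keys)) for i in range(len(query))]

money_bank = contextual_vector("bank", ["bank", "deposit", "money"])
river_bank = contextual_vector("bank", ["sat", "river", "bank"])
print(money_bank, river_bank)
```

In the first sentence the resulting vector leans toward the "finance" dimension, in the second toward "nature", even though the starting vector for “bank” is identical.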

Structure as Context Skeleton: Optimization from H1 to H6.

Since we already understand that context is king, we must learn how to build it. The most powerful and fundamental tool for this purpose is proper content structure based on heading hierarchy from H1 to H6. This is not just an element that facilitates reading, but primarily a semantic skeleton that gives direction and depth to the context vectors of our document.

H1: Lighthouse for Bots and Users.

The H1 heading is the most important structural element on a page. It can be compared to a lighthouse that sends a clear and unambiguous signal, informing both search engine algorithms and users about the overarching topic of the entire subpage. In the vector context, H1 defines the main directional vector – the central point around which all other vectors in the content will gravitate.

Creating the perfect H1 is the art of combining semantic precision with user appeal. On one hand, it must contain key entities and precisely describe the content, and on the other – be engaging enough to encourage further reading.

Let’s consider an example:

- Bad H1: “Our Offer”

- Vector analysis: The vector is very general, provides no specific information about the topic. It can be associated with millions of other pages that also have an “offer.”

- Good H1: “Advanced Digital Marketing Services for B2B Companies”

- Vector analysis: The vector is extremely precise. It combines entities such as “digital marketing,” “services,” “B2B companies,” creating a very specific and strong directional vector that unambiguously positions the page in semantic space.

Heading Hierarchy (H2-H6) as Context Map.

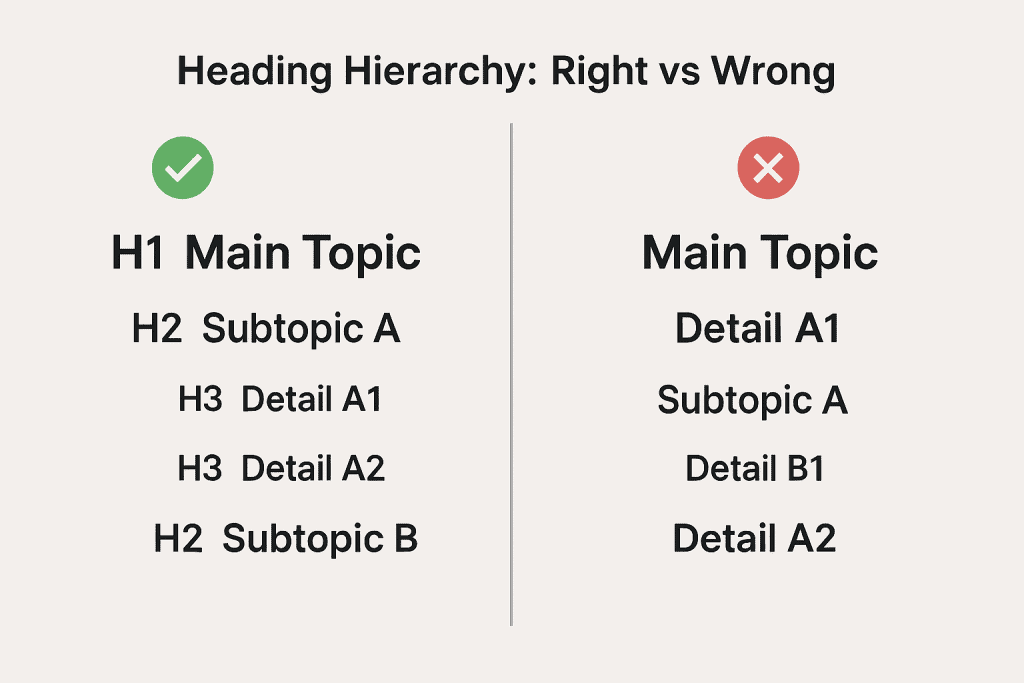

If H1 is the lighthouse, then headings from H2 to H6 are a detailed navigation map that guides through individual regions and thematic islands of our article. Proper, logical hierarchy is absolutely crucial. Each lower-level heading (Hn) should be a sub-vector that develops and details the parent vector (Hn-1). This creates a tree structure that is extremely readable for algorithms.

Never skip heading levels (e.g., from H2 to H4) or use them for purely stylistic purposes (e.g., to enlarge text). Such chaos in structure introduces information noise and weakens the strength of context vectors, making it difficult for machines to understand logical connections in the text.
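Skipped levels are easy to detect automatically. The following sketch uses Python’s standard `html.parser` module; the `HeadingCollector` class, the `find_skipped_levels` function, and the sample HTML are names and data made up for this example. It reports every place where a heading jumps down by more than one level.

```python
from html.parser import HTMLParser

class HeadingCollector(HTMLParser):
    """Collects the levels of h1-h6 tags in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # Tags arrive lowercased; "h1".."h6" are headings, "hr" is not.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def find_skipped_levels(html):
    """Return (previous_level, level) pairs where the hierarchy skips a level."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]

sample = "<h1>Guide</h1><h2>Batteries</h2><h4>Solid-state</h4><h2>Charging</h2>"
skipped = find_skipped_levels(sample)
print(skipped)
```

Moving back up the hierarchy (for example from H4 to H2) is not flagged, because closing several nested sections at once is perfectly valid.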

“Contextual Vectoring” in Practice: Linking Entities in Structure.

Understanding hierarchy allows us to move to an advanced technique that can be called “contextual vectoring.” It involves consciously grouping related entities, their attributes, and synonyms within logical sections that are defined precisely by headings. It’s like creating thematic chapters in a book.

Imagine we’re writing a comprehensive article about “electric cars.” Our heading structure could look like this:

H1: Comprehensive Guide to Electric Cars in 2025.

H2: Key Battery Technologies in EV Vehicles

H3: Lithium-ion Batteries: Current Standard

H3: Solid-state Batteries: The Future of Automotive?

H2: Infrastructure and Charging Methods

H3: AC vs. DC Chargers: Differences and Applications

H3: Public Charging Stations vs. Home Wallboxes

H2: Range and Performance in Different Conditions

Such a structure does more than just organize text. It actively builds deep, multidimensional context. The algorithm, analyzing the section under the H2 heading “Key Battery Technologies…,” immediately understands that the entities “lithium-ion batteries” and “solid-state batteries” are subordinate elements of the same concept. This strengthens their mutual relationships and creates a strong, coherent thematic cluster that search engines can interpret more easily and are more likely to rate highly.
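The parent-child relationships in this outline can be made explicit in a few lines of code. This is only a sketch of how a flat list of headings maps onto a tree; the `build_outline` function and its dictionary layout are assumptions for illustration and say nothing about how a search engine represents the structure internally.

```python
def build_outline(headings):
    """Turn (level, title) pairs into a nested tree using a stack of open sections."""
    root = {"title": None, "level": 0, "children": []}
    stack = [root]
    for level, title in headings:
        node = {"title": title, "level": level, "children": []}
        # Close every open section that is at the same or a deeper level.
        while stack[-1]["level"] >= level:
            stack.pop()
        stack[-1]["children"].append(node)
        stack.append(node)
    return root

headings = [
    (1, "Comprehensive Guide to Electric Cars in 2025"),
    (2, "Key Battery Technologies in EV Vehicles"),
    (3, "Lithium-ion Batteries: Current Standard"),
    (3, "Solid-state Batteries: The Future of Automotive?"),
    (2, "Infrastructure and Charging Methods"),
    (3, "AC vs. DC Chargers: Differences and Applications"),
    (3, "Public Charging Stations vs. Home Wallboxes"),
    (2, "Range and Performance in Different Conditions"),
]

outline = build_outline(headings)
h1 = outline["children"][0]
battery_section = h1["children"][0]
print([child["title"] for child in battery_section["children"]])
```

Both battery headings end up as children of the same H2 node, which is exactly the grouping described above.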

The Role of Structured Data (Schema.org).

In addition to headings, structured data (Schema.org) is a powerful tool for strengthening context. This is a standardized vocabulary that allows explicit description of entities on a page in a way understandable to machines. We can, for example, mark a text fragment as a “Recipe” and then define its attributes: “preparation time,” “ingredients,” “calorie count.”

From a vector perspective, structured data acts like precise labels that eliminate any doubts about the nature of a given entity. If Google sees that a page uses Product, Review, and AggregateRating schemas, its context vector is immediately and unambiguously placed in the “neighborhood” of other product and review pages, significantly increasing its relevance for commercial queries.
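As an illustration, the recipe attributes mentioned earlier map onto the Schema.org properties `prepTime`, `recipeIngredient`, and `nutrition.calories`. The sketch below builds such markup as JSON-LD with Python’s `json` module; the recipe itself and all of its values are invented for the example.

```python
import json

# Hypothetical recipe data; only the property names come from Schema.org.
recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Classic Tomato Soup",
    "prepTime": "PT15M",  # ISO 8601 duration: 15 minutes
    "recipeIngredient": ["1 kg tomatoes", "1 onion", "500 ml vegetable stock"],
    "nutrition": {
        "@type": "NutritionInformation",
        "calories": "180 calories",
    },
}

json_ld = json.dumps(recipe, indent=2)
# JSON-LD is embedded in the page inside a script tag of this type.
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
print(script_tag)
```

The generated block is placed in the page’s HTML, where it describes the entity explicitly instead of leaving it to be inferred from the surrounding text.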

Expert Quote.

The importance of creating unique and valuable content that goes beyond simple duplication has been emphasized for years by SEO industry leaders. Rand Fishkin, founder of Moz and SparkToro, put it this way:

“Take content which is largely duplicative and apply aggregation, visualization, or modifications to that duplicate content in order to build something unique and valuable and new that can rank well”.

These words, though spoken before the explosion of vector popularity, perfectly capture the essence of contextual optimization. It’s not about writing about the same things as others, but about taking existing information, analyzing it, and then building a new, unique, and better-organized structure based on it – a structure that creates new value and deeper context.

Advanced Content Optimization Techniques.

Mastering heading structure is the foundation on which we can build more advanced contextual constructions. Now that we have a solid skeleton, we can begin filling it with content in a way that maximizes its semantic depth and resonance with search engine algorithms. The following techniques will allow you to move from simple content creation to conscious sculpting in the vector space of meanings.

Semantic Gap Mapping and Filling (Semantic Gap Analysis).

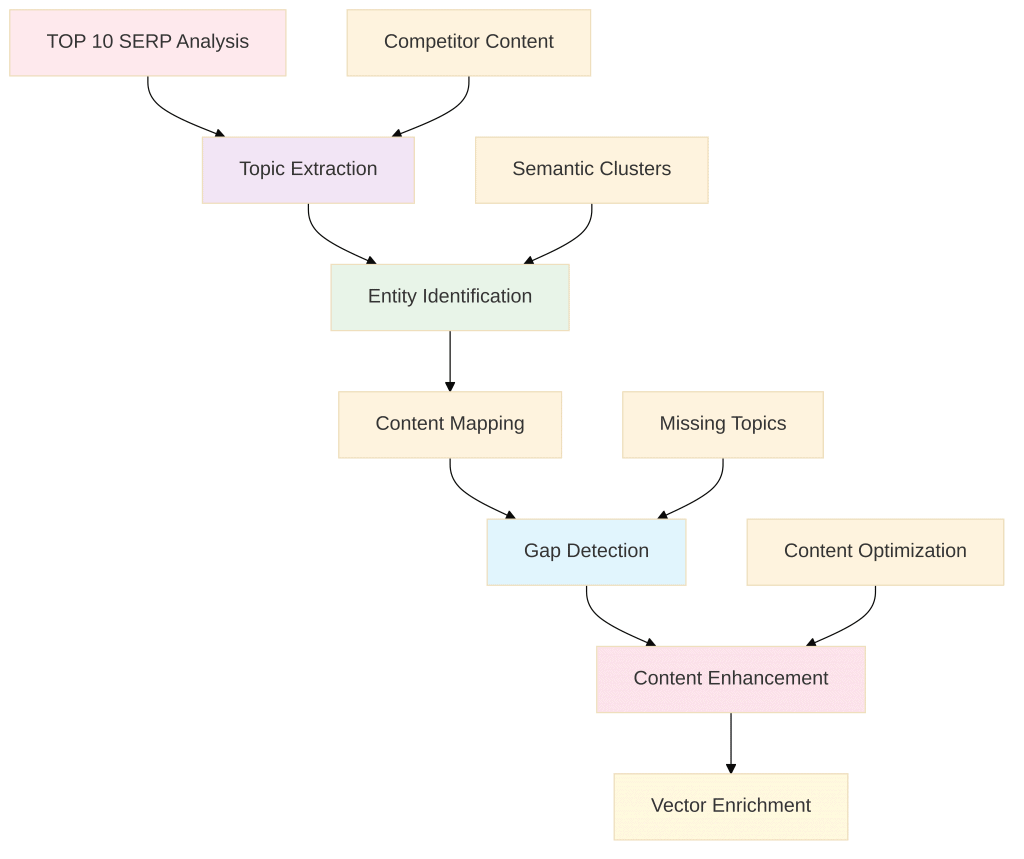

One of the most powerful strategies in content marketing is delivering content that is more complete and comprehensive than the competition. In the vector context, this means identifying and filling semantic gaps. A semantic gap is an important subtopic, question, or entity that is strongly related to the main topic, is present in competitor content occupying top positions in search results (SERP), but is missing from our article.

The semantic gap analysis process, though conceptually complex, boils down to several logical steps. It involves “mapping” the thematic territory covered by pages from the TOP10, then comparing this map with our own content to find “white spots.”

A manual analysis would involve carefully reading competitor articles and identifying sections and headings that we haven’t covered. Supplementing content with these missing elements makes our context vector richer and more complete, directly signaling to algorithms that our article is a more comprehensive source of information.
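Once the topics of each page have been collected, the comparison itself is simple set arithmetic. The sketch below assumes the topics are already available as lists of strings; the `find_semantic_gaps` function, the `min_pages` threshold, and the sample data are all hypothetical. It matches topics by exact wording, which is a simplification: in practice, similar topics phrased differently would need to be grouped first.

```python
from collections import Counter

def normalize(topic):
    """Lowercase and collapse whitespace so trivially different spellings match."""
    return " ".join(topic.lower().split())

def find_semantic_gaps(our_topics, competitor_pages, min_pages=2):
    """Topics covered by at least `min_pages` competitors but absent from our page."""
    ours = {normalize(t) for t in our_topics}
    counts = Counter()
    for page_topics in competitor_pages:
        # A set ensures each topic is counted once per competitor page.
        counts.update({normalize(t) for t in page_topics})
    gaps = [(t, n) for t, n in counts.items() if n >= min_pages and t not in ours]
    return sorted(gaps, key=lambda item: (-item[1], item[0]))

our_topics = ["Battery technologies", "Charging methods"]
competitor_pages = [
    ["Battery technologies", "Charging methods", "Purchase subsidies"],
    ["Charging methods", "Purchase subsidies", "Winter range"],
    ["Battery technologies", "Winter range", "Purchase subsidies"],
]

gaps = find_semantic_gaps(our_topics, competitor_pages)
print(gaps)
```

The result is a list of the “white spots”, ordered by how many competing pages cover each one.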

Entity Salience Enhancement.

Not all entities in text are equal. Entity Salience is a measure that determines how important and central a given entity (person, place, concept) is to the entire document. Google’s algorithms can assess this salience by analyzing a series of signals. Our task is to consciously strengthen these signals for the topics most important to us.

Entity salience enhancement techniques include:

- Placement. Entities mentioned in the title, H1 heading, at the beginning of text, and in first sentences of paragraphs naturally receive higher priority.

- Frequency and co-occurrence. Natural but regular repetition of the key entity and its synonyms in the company of other, strongly thematically related entities strengthens its central position.

- Emphasis. Using bold (<strong>) or italics (<em>) for key terms can be a subtle signal to algorithms that these fragments are particularly important.
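To get a rough feel for how placement and frequency interact, we can score entities with a toy heuristic. The sketch below is not how Google measures salience; the `salience_scores` function, the bonus values of 0.5 and 0.25, and the sample text are assumptions chosen purely for illustration.

```python
import re

def salience_scores(title, body, entities):
    """Toy heuristic: frequency share plus bonuses for the title and an early first mention."""
    text = body.lower()
    counts = {
        e: len(re.findall(r"\b" + re.escape(e.lower()) + r"\b", text))
        for e in entities
    }
    total = sum(counts.values()) or 1
    scores = {}
    for entity in entities:
        score = counts[entity] / total               # frequency share
        if entity.lower() in title.lower():
            score += 0.5                             # assumed title bonus
        first = text.find(entity.lower())
        if first != -1:
            score += 0.25 * (1 - first / len(text))  # assumed early-mention bonus
        scores[entity] = round(score, 3)
    return scores

title = "Solid-state batteries explained"
body = (
    "Solid-state batteries replace the liquid electrolyte with a solid one. "
    "Compared with lithium-ion cells, solid-state batteries promise higher "
    "energy density. Several carmakers plan pilot production."
)
scores = salience_scores(title, body, ["solid-state batteries", "lithium-ion cells", "carmakers"])
print(scores)
```

The entity that appears in the title, opens the text, and recurs most often comes out on top, which mirrors the placement and frequency signals listed above.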

Fun Fact: The concept of entity salience can also be used by Google to evaluate E-E-A-T indicators (Experience, Expertise, Authoritativeness, Trustworthiness). If an author’s vector (created based on all their publications on the internet) is strongly related to the article topic vector, the algorithm may consider them a more credible expert in the given field. This shows how contextual optimization extends beyond a single page and encompasses the entire digital identity of the author.

Internal Linking as Context Network.

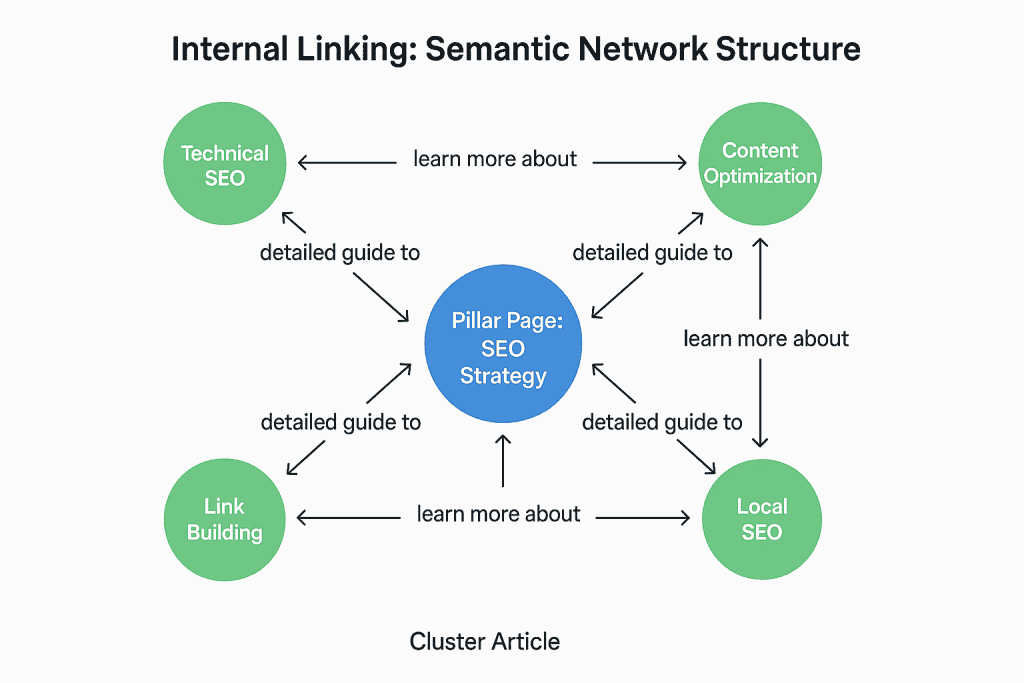

No article is a lonely island. Each subpage in a website is part of a larger ecosystem, and internal links are the blood vessels that connect them into a coherent, semantic network. In the vector context, internal linking is nothing other than creating “bridges between context vectors” of different documents. When we link from an article about “SEO strategies” to an article about “technical SEO,” we inform algorithms that the vectors of these two pages are related.

Strategic internal linking, especially in the pillar pages and topic clusters model, allows building enormous Topical Authority. The pillar page, broadly discussing the main topic, becomes the central hub, and cluster articles linking to and from it, detailing subtopics, create a dense network of connections that strengthens the context of the entire domain.

Anchor text, the clickable link text, plays a key role here. Precise anchor text (e.g., “learn more about technical SEO”) acts like a signpost that not only guides the user but also “informs” the algorithm about the target page’s topic, additionally strengthening its context vector.
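A pillar-and-cluster structure only works if the links actually exist in both directions, and that is straightforward to audit. The sketch below assumes the internal links have already been crawled into a dictionary; the `audit_cluster` function and the URLs are hypothetical.

```python
def audit_cluster(links, pillar, cluster_pages):
    """Report cluster pages missing a link to or from the pillar page.

    `links` maps each URL to the set of internal URLs it links to.
    """
    issues = {}
    for page in cluster_pages:
        missing = []
        if pillar not in links.get(page, set()):
            missing.append("no link to pillar")
        if page not in links.get(pillar, set()):
            missing.append("no link from pillar")
        if missing:
            issues[page] = missing
    return issues

links = {
    "/seo-strategies": {"/technical-seo", "/link-building"},
    "/technical-seo": {"/seo-strategies"},
    "/link-building": set(),
    "/content-seo": {"/seo-strategies"},
}

issues = audit_cluster(
    links,
    pillar="/seo-strategies",
    cluster_pages=["/technical-seo", "/link-building", "/content-seo"],
)
print(issues)
```

Pages that appear in the report are the broken “bridges” in the cluster: either they never point back to the hub, or the hub never points to them.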

NLP and “Passage Indexing” Optimization.

The final element of advanced optimization is writing with consideration for how NLP algorithms process text and how users consume information. Google’s introduction of “Passage Ranking/Passage Indexing” means that the search engine can identify and evaluate the value not only of entire pages but of their individual fragments.

This means we should structure our content so that individual sections can function as independent, valuable mini-articles that directly answer specific questions. This is the key to obtaining Featured Snippets and appearing in AI-generated responses (AI Overviews).

How to achieve this?

- Ask questions in headings. Use H2 or H3 headings in question form, which you answer directly in the paragraph below (e.g., H2: “How much does an electric car cost?”).

- Write concise, direct answers. The first sentence under such a heading should be a direct and complete answer to the question.

- Use lists and tables. Bulleted lists, numbered lists, and tables are extremely easy for algorithms to interpret and are often used in featured snippets.

This approach makes our content modular and “digestible” for AI, which can easily extract needed information from it and present it to users, even if the rest of the article covers a slightly broader topic. This is ultimate proof that contextual optimization is a game of precision and depth at every level – from the general H1 to a single, well-constructed paragraph.
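These recommendations can be turned into a simple self-check for a draft. The sketch below splits a text into passages by heading and flags question headings that are not followed by a short first sentence. The `H2:`/`H3:` line prefixes, the 40-word limit, and both function names are assumptions made for this example, not a documented threshold.

```python
def split_passages(lines):
    """Group lines into (heading, body) passages; headings are marked 'H2:' or 'H3:' here."""
    passages, heading, body = [], None, []
    for line in lines:
        if line.startswith(("H2:", "H3:")):
            if heading is not None:
                passages.append((heading, " ".join(body)))
            heading, body = line.split(":", 1)[1].strip(), []
        elif line.strip():
            body.append(line.strip())
    if heading is not None:
        passages.append((heading, " ".join(body)))
    return passages

def check_passage(heading, body, max_words=40):
    """Flag question headings whose first sentence is missing or longer than `max_words`."""
    if not heading.endswith("?"):
        return "not a question heading"
    first_sentence = body.split(". ")[0]
    if not first_sentence:
        return "no answer"
    return "ok" if len(first_sentence.split()) <= max_words else "first sentence too long"

draft = [
    "H2: How much does an electric car cost?",
    "Prices depend mainly on battery size and trim level. Smaller city models sit at the lower end.",
    "H2: Charging basics",
    "Home wallboxes charge overnight.",
]

passages = split_passages(draft)
report = [(heading, check_passage(heading, body)) for heading, body in passages]
print(report)
```

A check like this cannot judge whether the answer is correct or useful; it only confirms that each question-style section opens with something short enough to be lifted out as a standalone passage.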

Tools and Future.

Theory and advanced techniques are one thing, but daily SEO specialist work also requires appropriate tools. At the same time, to stay ahead, we must constantly look to the future, which is inextricably intertwined with artificial intelligence and new ways of processing information.

Tools Supporting Vector Optimization.

The SEO tools market is quickly adapting to the new, semantic reality. Although no tool will replace strategic thinking, many of them can significantly facilitate and accelerate the contextual optimization process. They can be divided into several main categories:

- SEO Content Editors. Tools like Contadu or NEURONwriter are currently an industry standard. They automate the semantic gap analysis process, comparing our content with top results and providing recommendations regarding terms, entities, and questions worth including in the text.

- Language Analysis APIs. For more advanced users, programming interfaces like Google Cloud Natural Language API or IBM Watson Natural Language Understanding allow deep, programmatic text analysis, including entity extraction, sentiment analysis, and salience assessment.

- Topic Research Tools. Platforms like AnswerThePublic, AlsoAsked, or Ahrefs (with its “Questions” function) help understand what questions users ask around a given topic, which is invaluable input for “Passage Indexing” optimization.

However, remember that these tools work on correlation principles – they analyze what already ranks well. They are powerful support, but blindly following their recommendations without understanding the overarching strategy and user intent can lead to creating content that is generic and lacks unique value.

The Future of SEO: Vectors, AI, and Personalization.

We are witnessing another revolution in how information is accessed. The future of search is inextricably linked to three key concepts: vector databases, generative AI, and personalization.

- Vector Databases. Specialized databases like Pinecone, Weaviate, or Milvus are designed to store and instantly search billions of vectors. They form the foundation for a new generation of search engines and RAG (Retrieval-Augmented Generation) systems that first find the most vector-relevant knowledge fragments, then use language models (LLMs) to generate personalized responses based on them.

- Generative AI and RAG. Instead of lists of blue links, users will increasingly receive direct, comprehensive answers generated by AI. This makes optimization for “Passage Indexing” and creating precise, easy-to-interpret content fragments absolutely crucial for future visibility.

- Personalization. Search is ceasing to be anonymous. Algorithms, knowing our history, location, and context, will be able to deliver answers tailored to our individual needs. The query vector will be modified by our user profile vector, leading to hyper-personalization of results.
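The retrieval step of such a system, and the way a profile can shift it, can be sketched without any database at all. The code below uses brute-force cosine similarity over three invented passage vectors; vector databases typically rely on approximate indexes to do the same at scale. The linear blend in `personalize`, its `weight` parameter, and all vectors are assumptions for illustration rather than a description of any real search engine.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def top_k(query, index, k=2):
    """Brute-force nearest-neighbor search over (passage_id, vector) pairs."""
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [passage_id for passage_id, _ in ranked[:k]]

def personalize(query, profile, weight=0.3):
    """Blend the query vector with a user profile vector (assumed linear mix)."""
    return [(1 - weight) * q + weight * p for q, p in zip(query, profile)]

# Hypothetical 3-d passage vectors with dimensions (charging, pricing, performance).
index = [
    ("home-wallbox-guide", [0.9, 0.2, 0.1]),
    ("ev-price-comparison", [0.1, 0.9, 0.2]),
    ("winter-range-test", [0.2, 0.1, 0.9]),
]
query = [0.6, 0.5, 0.1]
profile = [0.0, 1.0, 0.0]  # a user who mostly reads about pricing

plain = top_k(query, index, k=1)
personal = top_k(personalize(query, profile, weight=0.5), index, k=1)
print(plain, personal)
```

The same query retrieves a different top passage once the profile is mixed in. In a RAG system, the retrieved passages would then be handed to a language model to generate the final answer.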

Larry Page, Google co-founder, predicted this future many years ago, saying:

“Artificial intelligence would be the ultimate version of Google. The ultimate search engine that would understand everything on the web. It would understand exactly what you wanted, and it would give you the right thing. We’re nowhere near doing that now. However, we can get incrementally closer to that, and that is basically what we work on.”

His words are more relevant today than ever. Every step toward better contextual optimization is a step toward that vision – a world where information is not only available but fully understandable.

Summary

We have moved from the era of keywords to the era of meaning. Context vector optimization is not another passing fad in SEO, but a fundamental paradigm shift reflecting the evolution of search engines themselves. The key conclusions from our journey are clear: success in modern SEO requires moving away from mechanical phrase stuffing toward building deep, multidimensional context. The foundation of this process is logical and hierarchical content structure that creates a semantic skeleton for algorithms.

On this foundation, we can apply advanced techniques such as filling semantic gaps, strengthening the salience of key entities, and building dense networks of connections through internal linking.

Ultimately, context vector optimization is not technical manipulation, but striving to best satisfy user informational intent. It is the process of creating content so clear, comprehensive, and well-organized that it becomes the best possible answer to the asked question.

With the growing role of AI in content generation, new ethical challenges also emerge. Optimization cannot lead to misinformation or manipulation, and authenticity and value for humans must remain the overriding goal. Start thinking about your content not as a single document, but as a dense network of connected meanings that brings real value to the digital ecosystem. This is the future of SEO.